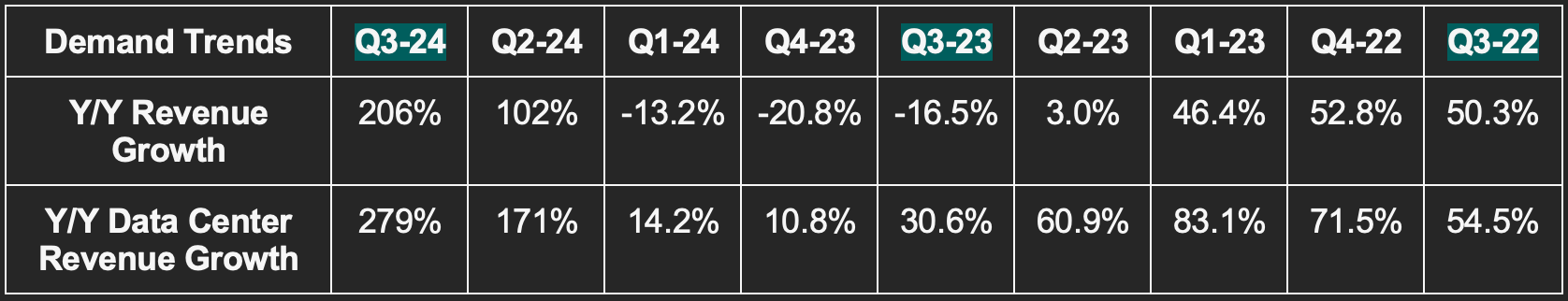

Demand

- Beat revenue estimates by 12.3% and beat its guidance by 13.1%.

- The firm’s 56.4% 3-year compound annual growth rate (CAGR) compares to 51.7% as of last quarter and 32.7% as of 2 quarters ago.

- Data Center is the revenue bucket that Gen AI’s proliferation supports.
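For readers who want to reproduce the arithmetic behind "beat by X%" and CAGR figures, here is a minimal sketch. The function names and the 113.1 vs. 100.0 inputs are my own illustrative assumptions, not reported figures; only the 56.4% CAGR comes from the bullets above.

```python
def beat_pct(actual: float, benchmark: float) -> float:
    """Percent by which `actual` exceeds `benchmark` (an estimate or a guide)."""
    return (actual / benchmark - 1.0) * 100.0


def cagr_pct(start: float, end: float, years: float) -> float:
    """Compound annual growth rate, in percent, from `start` to `end`."""
    return ((end / start) ** (1.0 / years) - 1.0) * 100.0


def growth_multiple(cagr: float, years: float) -> float:
    """Total growth multiple implied by a CAGR (in percent) held for `years`."""
    return (1.0 + cagr / 100.0) ** years


# Hypothetical revenue of 113.1 against a guide of 100.0 (illustrative units only).
print(f"beat: {beat_pct(113.1, 100.0):.1f}%")

# The 56.4% 3-year CAGR quoted above implies revenue at roughly this multiple
# of its level three years earlier.
print(f"multiple: {growth_multiple(56.4, 3):.2f}x")
```

Put differently, a 56.4% 3-year CAGR means revenue is running at about 3.8x where it was three years ago.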

Profitability

- Beat non-GAAP EBIT estimates by 18.7% & beat guidance by 20.4%.

- Beat GAAP EBIT guidance by 22.7%.

- Beat $3.40 earnings per share (EPS) estimates by $0.61 & beat guidance by $0.70.

- Beat 71.5% GAAP gross profit margin (GPM) guide by 250 basis points (bps; 1 bp = 0.01 percentage points).

- Beat 72.5% non-GAAP GPM guide by 250 bps.
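The basis-point and EPS bullets above imply the actual results. A quick sketch of that back-out, using only the numbers in the bullets (the variable names are mine, and the "implied" values are simple arithmetic, not separately reported figures):

```python
def add_bps(pct: float, bps: float) -> float:
    """Add basis points to a percentage (100 bps = 1 percentage point)."""
    return pct + bps / 100.0


# Gross margin guides from the two GPM bullets, each beaten by 250 bps.
first_gpm_actual = add_bps(71.5, 250)
second_gpm_actual = add_bps(72.5, 250)

# EPS: estimate of $3.40 beaten by $0.61; guidance beaten by $0.70.
eps_actual = 3.40 + 0.61
eps_guide_implied = eps_actual - 0.70

print(f"implied GPMs: {first_gpm_actual:.1f}% and {second_gpm_actual:.1f}%")
print(f"implied EPS: ${eps_actual:.2f} vs. implied guide of ${eps_guide_implied:.2f}")
```

So a 250 bps beat on a 71.5% guide means a 74.0% margin, and the EPS bullet implies roughly $4.01 in actual EPS.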

Fiscal Q4 2024 Guidance

- Beat revenue estimate by 12.4%.

- Beat non-GAAP EBIT estimate by 19.4%.

- Beat non-GAAP net income estimate by 19.3%.

- Beat 74.7% non-GAAP GPM estimate by 80 bps.

Balance Sheet

- $18.3 billion in cash & equivalents. Strong cash collections led to a decline in accounts receivable.

- $9.7 billion in debt with $1.25 billion being current (due in the next 12 months).

- $4.78 billion in inventory with purchase commitments for $17.1 billion.

- $6.87 billion in year-to-date buybacks vs. $8.83 billion Y/Y.

- Share count fell slightly Y/Y. It’s still hiring aggressively to meet demand and that means more stock comp. Considering these results, that’s something I think shareholders (which I am not) should be entirely fine with.
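A few derived balance sheet figures fall out of the bullets above. This is a rough sketch using only those numbers (in billions of dollars); the metric names are my own, and "net cash" here counts only the cash & equivalents line quoted above.

```python
# All inputs are in billions of dollars, taken from the bullets above.
cash = 18.3
total_debt = 9.7
current_debt = 1.25
inventory = 4.78
purchase_commitments = 17.1
buybacks_ytd = 6.87
buybacks_prior_ytd = 8.83

net_cash = cash - total_debt                      # cash left after paying all debt
long_term_debt = total_debt - current_debt        # debt due beyond 12 months
commitments_vs_inventory = purchase_commitments / inventory
buyback_change_pct = (buybacks_ytd / buybacks_prior_ytd - 1.0) * 100.0

print(f"net cash: ${net_cash:.1f}B")
print(f"long-term debt: ${long_term_debt:.2f}B")
print(f"purchase commitments: {commitments_vs_inventory:.1f}x inventory")
print(f"buybacks Y/Y: {buyback_change_pct:.1f}%")
```

Purchase commitments at about 3.6x current inventory line up with the supply ramp discussed later in the piece.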

Call & Release Highlights

Important Definitions & Acronyms

- GPU: Graphics Processing Unit. This is an electronic circuit originally built to render screen images; its parallel processing also makes it well suited to AI workloads.

- CPU: Central Processing Unit. This is a different type of electronic circuit that carries out tasks/assignments and data processing from applications.

- DGX: Nvidia’s full stack platform combining its chipsets and software services.

- Hopper: Nvidia’s new GPU architecture designed for accelerated compute and Generative AI use cases. Key piece of the DGX platform.

- H100: Its Hopper 100 Chip.

- L40S: Another, more barebones GPU chipset based on Ada Lovelace architecture. This works best for less complex needs.

- Ampere: The GPU architecture that Hopper replaces for a 16x performance boost.

- Grace: Nvidia’s new CPU architecture designed for accelerated compute and Generative AI use cases. Key piece of the DGX platform.

- GH200: Its Grace Hopper 200 Superchip, which pairs an Nvidia Hopper GPU with Nvidia’s Arm-based Grace CPU.

- InfiniBand: Interconnect tech providing an ultra-low latency computing network.

- NeMo: Guided step-functions to build granular Gen AI models for client-specific needs.

- Generative AI Model Training: One of two key layers to model development. This seasons a model by feeding it specific data.

- Generative AI Model Inference: The second key layer to model development. This pushes trained models to create new insights and uncover new, related patterns. It connects data dots that we didn’t realize were related. Training comes first. Inference comes second.

Data Center Going Gangbusters

The Data Center explosion is being supported by the Hopper-based H100 and the coinciding Gen AI boom. Data center computing demand rose by a whopping 324% Y/Y powered by broad DGX growth. Demand was evenly split between cloud service providers (CSPs) and large consumer internet players like Meta.

H100 scales more efficiently than all competitors; this is key given the voracious data consumption of models and Gen AI apps. That claim comes from independent MLPerf benchmarks, not internal findings. Per leadership, the H100 “remains the top performing, most cost-effective and most versatile platform for AI training – by a wide margin.”

Newer Data Center Chips

Nvidia is releasing the next iteration of its H100 chips (shockingly called H200) which doubles model inference performance – thus cutting costs in half (or doubling the value of each chip).

Tensor Runtime (TensorRT) is Nvidia’s software library of tools for optimizing inference performance. It just released TensorRT-LLM, an extension aimed at Large Language Models (LLMs), which doubled inference performance (so cut cost in half). NVDA continues to iterate constantly to drive better efficiency and that will not change.
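The "double the performance, halve the cost" logic in the two paragraphs above can be sketched as follows. This assumes cost per inference scales inversely with throughput at a fixed hourly chip cost; the $4.00/hour and 1,000 inferences/hour inputs are hypothetical placeholders, not Nvidia figures.

```python
def cost_per_inference(chip_cost_per_hour: float, inferences_per_hour: float) -> float:
    """Cost of one inference, assuming a fixed hourly cost for the chip."""
    return chip_cost_per_hour / inferences_per_hour


def cost_change_pct(speedup: float) -> float:
    """Percent change in cost per inference for a given throughput speedup."""
    return (1.0 / speedup - 1.0) * 100.0


# Hypothetical baseline: $4.00 per chip-hour, 1,000 inferences per hour.
before = cost_per_inference(4.00, 1_000)
after = cost_per_inference(4.00, 2_000)  # same chip cost, doubled throughput

print(f"before: ${before:.4f}, after: ${after:.4f}")
print(f"cost change at 2x performance: {cost_change_pct(2.0):.0f}%")
```

The same function shows why any speedup matters: every gain in throughput at a fixed chip cost flows straight through to cost per inference.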

Initial shipments of Nvidia’s GH200 chip are now underway. While H100 is perfect for most model training and inference, the GH200 allows this to be done on a larger scale for those with the heftiest needs. It also enables more real-time data visualization and analytics on a larger scale vs. H100. Both are rapidly ramping into multi-billion dollar product lines at an unprecedented pace.

“We will also have a broader and faster product launch cadence to meet a growing and diverse set of AI opportunities.” – CFO Colette Kress

Data Center Networking Revenue

Nvidia’s networking bucket is all revenue generated from technology allowing “products to communicate with one another.” It just crossed a $10 billion annual revenue run rate because its products are required for “gaining the performance needed to train models.” Azure uses 29,000 miles of NVDA InfiniBand connectivity cabling and virtually all other cloud service providers lean heavily on it as well. Networking revenue rose 155% Y/Y via strong InfiniBand infrastructure “to support data center demand.”

Going forward, Nvidia plans to expand into Ethernet networking. It will release a new product (called Spectrum-X) next quarter with initial support from Dell, Hewlett Packard Enterprise and Lenovo. Spectrum-X delivers 60% better networking performance for AI communication vs. alternatives. This means lower cost. Better performance and lower cost are a consistent Nvidia product theme.

As an important aside, virtually every large cap tech consumer internet or cloud company uses Nvidia chips: Amazon, Microsoft, Google, Meta, Adobe, OpenAI, ServiceNow, Databricks and Snowflake. The list goes on, and on, and on. It powers ChatGPT, Microsoft Copilot, Firefly, Now Assist, Meta AI, etc. The company’s products are ubiquitous and virtually synonymous with AI.

Public Market Opportunity & Supercomputers

- Working in tandem with India’s government and tech giants there (Reliance, Tata, etc.) to “boost their sovereign AI infrastructure.”

- The U.K. government will use 24,000 GH200 superchips to build a new supercomputer.

- Initial chip shipments to The Swiss National Supercomputing Center started this quarter.

“National investment in computer capacity is a new economic imperative. Serving the sovereign AI infrastructure market represents a multibillion-dollar opportunity over the next few years.” – CFO Colette Kress

H100 Supply

Supply bottlenecks remain in place. This is sort of ridiculous to think about given the insane growth this company continues to post. Imagine what it would look like if it could fulfill all orders today. Supply constraints are easing but will take a few more quarters to fully disappear (assuming demand doesn’t ramp beyond expectations).

China Export Restrictions

The U.S. government will implement new export restrictions for chipsets that exceed certain performance thresholds. The countries involved include China, Saudi Arabia and a few others.

The new rules impact chipsets within its Ampere, Hopper (H100), Grace (GH200) and Ada Lovelace (L40S) architectures. Companies will be required to secure licensing before shipping these types of chips. The new rules are now in place and will start impacting Nvidia this quarter.

Over the last few quarters, China has represented 20%-25% of all data center revenue for Nvidia. It expects revenue from that nation to sharply drop going forward due to these rules. Fortunately, it believes that demand elsewhere will more than offset this blow. Remember, H100 remains materially supply constrained.
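To size what "more than offset" requires, here is a back-of-the-envelope sketch. It assumes the worst case where China data center revenue goes to zero (the company only said it expects a sharp drop), and the function name and the `decline` parameter are my own.

```python
def required_growth_elsewhere_pct(china_share: float, decline: float = 1.0) -> float:
    """Growth needed from non-China revenue to keep the total flat.

    china_share: China's share of data center revenue (0.20 to 0.25 per the text).
    decline: fraction of China revenue lost (1.0 = all of it, an assumption).
    """
    lost = china_share * decline
    rest_of_world = 1.0 - china_share
    return lost / rest_of_world * 100.0


for share in (0.20, 0.25):
    needed = required_growth_elsewhere_pct(share)
    print(f"China at {share:.0%} of data center revenue -> "
          f"rest of world must grow {needed:.1f}% to offset")
```

In the worst case, the rest of the world needs to grow data center revenue by roughly 25% to 33% just to hold the total flat. That is a low bar against the triple-digit Y/Y growth quoted earlier in this piece, which is why supply constraints matter more than China today.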

Nvidia is working to procure the needed licensing to resume shipments but does not know when that will occur. Alternatively, it is working to ensure it has more supply of lower performance chips for these nations going forward. Notably, the rollout of one regulatory-compliant chipset for China has been delayed.

Gaming, Auto & Professional Visualization

Growth for Gaming and Professional Visualization was helped by normalization of channel inventory gluts earlier in the year.

Gross Margins

Gross margin was bolstered by a mix shift to data center revenue, revenue outperformance and lower inventory provisions Y/Y. Those provisions were from Ampere, not Grace or Hopper. Notably, provisions in last year’s quarter hit GPM by 1150 bps vs. just 240 bps this quarter. That was a material source of gross margin expansion, but not the only one: GPM expanded by 2040 bps Y/Y, while the provisions comp tailwind accounted for only 910 bps of that.
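The gross margin bridge in that paragraph works out as follows. This is a simple sketch using only the basis-point figures above; the variable names are mine.

```python
# All figures in basis points (bps), taken from the paragraph above.
provisions_hit_last_year = 1150
provisions_hit_this_year = 240
total_gpm_expansion = 2040

# Tailwind from easier provisions comps, and what is left for everything else
# (mix shift to data center, revenue outperformance).
provisions_tailwind = provisions_hit_last_year - provisions_hit_this_year
other_expansion = total_gpm_expansion - provisions_tailwind
provisions_share_pct = provisions_tailwind / total_gpm_expansion * 100.0

print(f"provisions tailwind: {provisions_tailwind} bps")
print(f"expansion from other sources: {other_expansion} bps")
print(f"provisions share of total expansion: {provisions_share_pct:.1f}%")
```

So even after stripping out the provisions comp, gross margin still expanded by 1,130 bps Y/Y, which is more than half of the total.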

The explosion in revenue coincided with 16% Y/Y GAAP operating expense (OpEx) growth and 13% Y/Y non-GAAP OpEx growth. Crazy leverage.

Quick Notes on Software

Nvidia’s software and services revenue bucket will soon cross $1 billion in annual revenue. This includes DGX cloud services and its AI enterprise software tools. Genentech was announced as a new customer. It will use Nvidia’s BioNeMo LLM framework to accelerate drug discovery. NeMo is its standardized framework for building LLMs with BioNeMo being more specific to biotech use cases.

Nvidia’s deepening Azure partnership will now allow custom Gen AI apps built through Nvidia to be seamlessly run on Azure. Customers will be able to access any needed Azure data to build models most specific to their needs.

My Take

This is one of the best large cap quarters I’ve ever covered. Nvidia’s last quarter was the second best with its performance 2 quarters ago being the third best. Incredible performance and incredible execution paired with a best-in-class value prop are paving the way for some historic results. I’m not a shareholder, just an admirer.

For the sake of balance, I’d like to provide the two biggest risks to the bull case that I see. Clearly, those risks aren’t impacting the company in the slightest today, but they’re still worth noting.

The first is cyclicality. We’ve had supercycles before. Supercycles have led to crazy spikes in demand growth for semiconductor firms. Never this crazy, but crazy. The risk here is that supply dynamics normalize, competition begins to catch up (long way to go) and that growth reverts to the “only” roughly 25%-30% multi-year CAGR we’ve previously seen. 30x earnings (where Nvidia trades) is actually very cheap when you’re growing those earnings 50% annually. It’s less cheap if leverage slows and growth dips back to multi-year trends. That will certainly happen eventually. The risk is that it happens sooner than some expect. I have no opinion here as I don’t know enough about the industry to have an opinion. But it’s a risk.
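To make the "30x is cheap at 50% growth, less cheap at 25%-30%" point concrete, here is a rough sketch. It uses the PEG ratio (a common rule of thumb, not something from the earnings call) and assumes a constant share price and a constant growth rate over two years. Both are simplifying assumptions of mine.

```python
def peg_ratio(pe: float, growth_pct: float) -> float:
    """Price/earnings divided by annual earnings growth in percent."""
    return pe / growth_pct


def pe_on_future_earnings(pe: float, growth_pct: float, years: int) -> float:
    """Today's price divided by earnings `years` out, at constant growth."""
    return pe / (1.0 + growth_pct / 100.0) ** years


current_pe = 30.0  # the multiple cited in the paragraph above

for growth in (50.0, 30.0, 25.0):
    peg = peg_ratio(current_pe, growth)
    future_pe = pe_on_future_earnings(current_pe, growth, years=2)
    print(f"{growth:.0f}% growth: PEG {peg:.2f}, "
          f"price / earnings in 2 years = {future_pe:.1f}x")
```

At 50% growth, today's price is about 13x earnings two years out; at 25% growth, it is about 19x. Same stock, same price, very different valuation depending on how long the supercycle lasts.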

The second is China. It’s easy to replace 25% of data center demand when supply constraints remain in place and demand is racing. It’s harder to plug that gap in more normal times like the scenario that I’m describing above.

What isn’t a pressing risk here? The claims of fraud that some seem to love to make on social media. I call these people conspiracy theorists… and the conspiracy is not compelling in this case. All in all, this has been a perfect year for Nvidia. Take a bow, Jensen… then take another bow. You called out this super-cycle years before the rest.